Configuration required for Hive transactional operations

Updating tables in Hive

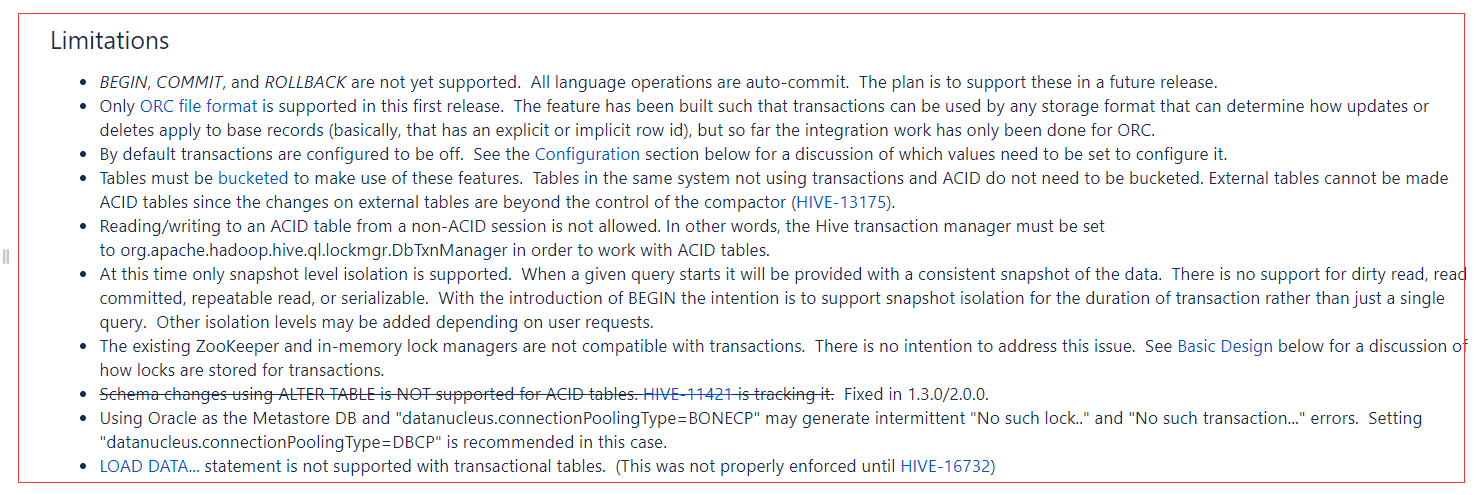

Limitations

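- Transactions are supported only for data stored in ORC format.

- The required configuration settings (listed below) must be in place.

- The table must be bucketed.

- The table property transactional must be set to true: TBLPROPERTIES ('transactional'='true').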

Required configuration

Client Side

- hive.support.concurrency – true

- hive.enforce.bucketing – true (not required as of Hive 2.0)

- hive.exec.dynamic.partition.mode – nonstrict

- hive.txn.manager – org.apache.hadoop.hive.ql.lockmgr.DbTxnManager
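
As a minimal sketch, the client-side settings above can be applied per session with SET statements before running any transactional query. This assumes the cluster allows these properties to be changed at session level; otherwise they belong in the client's hive-site.xml.

```sql
-- Client-side settings for Hive transactions, applied to the current session only.
SET hive.support.concurrency=true;
SET hive.enforce.bucketing=true;   -- only needed before Hive 2.0
SET hive.exec.dynamic.partition.mode=nonstrict;
SET hive.txn.manager=org.apache.hadoop.hive.ql.lockmgr.DbTxnManager;

-- SET with a property name and no value prints the value currently in effect.
SET hive.txn.manager;
```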

Server Side (Metastore)

- hive.compactor.initiator.on – true (See table below for more details)

- hive.compactor.worker.threads – a positive number on at least one instance of the Thrift metastore service; typically set to 1

- hive.txn.manager – org.apache.hadoop.hive.ql.lockmgr.DbTxnManager (testing showed that this setting is also required on the server side)

Example:

    CREATE DATABASE merge_data;

    CREATE TABLE merge_data.transactions(
        ID int,
        TranValue string,
        last_update_user string)
    PARTITIONED BY (tran_date string)
    CLUSTERED BY (ID) INTO 5 BUCKETS
    STORED AS ORC TBLPROPERTIES ('transactional'='true');

    CREATE TABLE merge_data.merge_source(
        ID int,
        TranValue string,
        tran_date string)
    STORED AS ORC;
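
With the two tables in place, the following sketch shows how they might be used together: load a few rows, then fold merge_source into transactions with a single MERGE. The sample values and the 'creation', 'merge_update', 'merge_insert' and 'manual_fix' labels are made up for illustration, and MERGE assumes Hive 2.2 or later. On older versions only the plain UPDATE and DELETE statements at the end apply.

```sql
-- Hypothetical sample rows for the transactional target table.
-- The partition value is taken from the last column (dynamic partitioning).
INSERT INTO merge_data.transactions PARTITION (tran_date) VALUES
  (1, 'value_01', 'creation', '20170410'),
  (2, 'value_02', 'creation', '20170410'),
  (3, 'value_03', 'creation', '20170411');

-- Hypothetical incoming changes.
INSERT INTO merge_data.merge_source VALUES
  (1, 'value_01_new', '20170410'),  -- changed value: should update row 1
  (2, NULL,           '20170410'),  -- NULL value: used here to mark row 2 for deletion
  (4, 'value_04',     '20170412');  -- unknown ID: should be inserted

-- Apply updates, deletes and inserts in one statement.
MERGE INTO merge_data.transactions AS T
USING merge_data.merge_source AS S
ON T.ID = S.ID AND T.tran_date = S.tran_date
WHEN MATCHED AND (T.TranValue != S.TranValue AND S.TranValue IS NOT NULL)
  THEN UPDATE SET TranValue = S.TranValue, last_update_user = 'merge_update'
WHEN MATCHED AND S.TranValue IS NULL
  THEN DELETE
WHEN NOT MATCHED
  THEN INSERT VALUES (S.ID, S.TranValue, 'merge_insert', S.tran_date);

-- Plain row-level statements also work on the transactional table.
UPDATE merge_data.transactions SET last_update_user = 'manual_fix' WHERE ID = 3;
DELETE FROM merge_data.transactions WHERE tran_date = '20170412';
```

Note that the ON clause includes tran_date, the partition column, in addition to ID, so a source row only matches a target row in the same partition.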

Setting compaction options at the table level via TBLPROPERTIES

    CREATE TABLE table_name (
        id int,
        name string
    )
    CLUSTERED BY (id) INTO 2 BUCKETS STORED AS ORC
    TBLPROPERTIES ("transactional"="true",
        "compactor.mapreduce.map.memory.mb"="2048", -- specify compaction map job properties
        "compactorthreshold.hive.compactor.delta.num.threshold"="4", -- trigger minor compaction if there are more than 4 delta directories
        "compactorthreshold.hive.compactor.delta.pct.threshold"="0.5" -- trigger major compaction if the ratio of size of delta files to
                                                                      -- size of base files is greater than 50%
    );
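
The same properties can also be inspected or changed after the table exists. A short sketch, reusing table_name from the example above; the new threshold value of 8 is an arbitrary illustration, not a recommendation:

```sql
-- List the table properties currently set, including the compaction overrides.
SHOW TBLPROPERTIES table_name;

-- Raise the minor-compaction trigger from 4 to 8 delta directories (illustrative value).
ALTER TABLE table_name SET TBLPROPERTIES (
  "compactorthreshold.hive.compactor.delta.num.threshold"="8"
);
```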

Setting compaction options at the request level

    ALTER TABLE table_name COMPACT 'minor'
        WITH OVERWRITE TBLPROPERTIES ("compactor.mapreduce.map.memory.mb"="3072"); -- specify compaction map job properties

    ALTER TABLE table_name COMPACT 'major'
        WITH OVERWRITE TBLPROPERTIES ("tblprops.orc.compress.size"="8192"); -- change any other Hive table properties
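
A compaction request is only queued by these statements; the metastore's compactor worker threads run it asynchronously. As a rough sketch, the queue and transaction state can be checked from the same session. The partition value below is a hypothetical one for the merge_data.transactions table defined earlier.

```sql
-- For a partitioned table, compaction is requested per partition.
ALTER TABLE merge_data.transactions PARTITION (tran_date='20170410') COMPACT 'major';

-- Show queued, running and recently finished compactions.
SHOW COMPACTIONS;

-- Show open and aborted transactions known to the metastore.
SHOW TRANSACTIONS;
```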